Article

Slicing Up Binary Towers: Accelerating Sumcheck on GPUs

We demonstrate high-throughput binary tower sumcheck on GPUs using bit-slicing, achieving a 4x speedup over CPU baseline

11/19/24

Irreducible Team

One of Binius's key strengths is its superior efficiency in binary field arithmetic compared to prime-field arithmetic. We've validated this in both our FPGA implementation and ASIC designs. However, this advantage doesn't automatically translate to commodity platforms like GPUs, which aren't optimized for binary tower arithmetic.

While the Binius software implementation leverages SIMD CPU instructions (GF2P8MULB on x86-64 and VMULL.P8 on ARM64) for 8-bit field multiplication, Nvidia processors lack native support for binary fields.

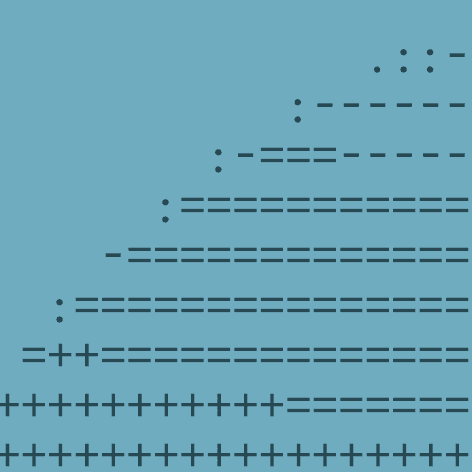

To address this, we've implemented an approach for high-throughput binary tower arithmetic on standard processors using a technique called bit-slicing. As the table shows, our GPU implementation achieved a 2.4-3.7x speedup, with the factor increasing as the number of variables grows. Moreover, we observed an 80% cost reduction compared to the CPU instance. Given that sumcheck is the main computational bottleneck of Binius, these results suggest a path to making Binius 5x cheaper for client-side proving.

*Speedup = CPU Time / GPU Time

**Cost Savings based on the per hour, on-demand prices of g2-standard-8 (L4 GPU with 24G VRAM) vs c3-standard-22 GCP instances

Understanding Sumcheck

Sumcheck is a fundamental primitive in many zero-knowledge proof systems, including next-generation systems like Binius. It’s an interactive protocol that enables a prover to convince a verifier about the correctness of a polynomial’s sum evaluated over all possible binary inputs, without the verifier performing the entire computation.

For a polynomial P(x₁, x₂, ..., xₙ) with n variables, computing its sum over all 2ⁿ possible binary inputs would be exponentially expensive. Sumcheck dramatically reduces the verifier’s computational burden to O(n) communication and computation.

Sumcheck is the primary computational bottleneck in Binius proof generation, so accelerating this algorithm directly leads to higher transaction throughput for ZK-based blockchain scaling solutions.

Accelerating Binary Field Operations

We’ll describe a simplified case of sumcheck where the polynomial P(x₁, x₂, ..., xₙ) is the product of d other n-variate polynomials, which are multilinear. Multinear polynomials can be represented by column vectors of 128-bit field elements with height 2ⁿ, and so their product can be represented by a 2ⁿ-column by d-row table.

The sumcheck protocol proceeds through n rounds of interaction between the prover and verifier. In each round, the prover

splits the table into two tables,

extrapolates the two tables to generate d-1 temporary tables (O(d 2ⁿ) multiplications),

computes products across the rows of the tables (O(d 2ⁿ) multiplications),

sums these products, and

folds the original table in half with a verifier-issued random challenge ((O(2ⁿ) multiplications).

Running sumcheck on a polynomial with 20 variables and degree 3, typical for our benchmarks, requires over 2 million multiplications. Fortunately, the expensive multiplications required in steps 2, 3, and 5 have an embarrassingly parallel structure. Our goal is to maximize GPU-enabled parallel computation of these multiplications using bit-slicing.

Bit-Slicing Explained

Bit-slicing is a data reorganization technique to maximize parallel computation. It’s been applied to the Advanced Encryption Standard (AES) and for multiplying polynomials with binary-field coefficients, as described in Ben-Sasson et al.’s paper Fast Multiplication in Binary Fields on GPUs via Register Cache (2016). We adapted the technique for large fields in the Fan-Paar Binary Tower construction, which has a lower computational cost natively than the fields used in this 2016 paper.

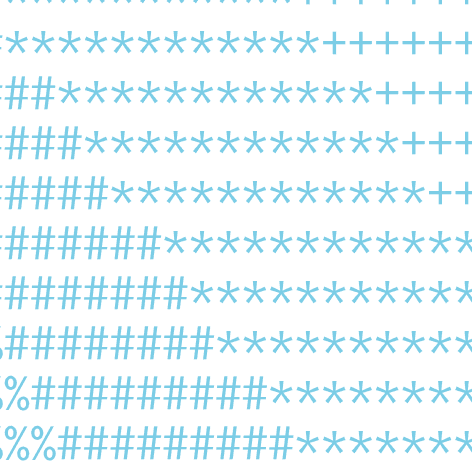

To understand bit-slicing, imagine we have the following binary numbers:

In a standard layout, these numbers would be stored consecutively in memory. Multiplying the binary field elements that they represent would involve shifting and interleaving bits, which adds overhead in terms of the required number of instruction cycles. However, in a bit-sliced or strided format, the numbers can be rearranged like so

*Bit position 1: 0011; Bit position 2: 1010; Bit position 3: 0110; Bit position 4: 1101

Rather than handling each binary number one by one, we can perform operations like XOR or AND on all corresponding bits from each position simultaneously.

For a more technical explanation, let’s consider two 128-bit tower field values x and y. In a standard layout, these 128-bit integers would be represented consecutively in memory, occupying 4 consecutive 32-bit registers.

But what happens if we want to do 32 of these multiplications? Suppose we want to know the products (x_0*y_0, x_1*y_1…,x_31*y_31). A single-threaded layout requires costly multiplication, shifting and interleaving steps 32 times. With bit-slicing, however, we can store 32 128-bit values {x_0…x_31} using 128 32-bit registers.

*In register j of block_a and block_b, we store the jth bit of a_i and b_i, respectively, for each i in {0,1…31}

This approach means that the bits we want to operate on are perfectly aligned for parallel processing. Each register contains bits from 32 different field elements at the same position. For example, register 0 (a[0] and b[0] above) contains bit 0 from 32 different field elements. Because ANDs and XORs can only act on operands in the same bit positions, we can compute the product of the two 128-register blocks (block_a and block_b) by running a simple circuit on each of our strided values in parallel. In doing this, we get 32 products in parallel.

GPU Parallelism

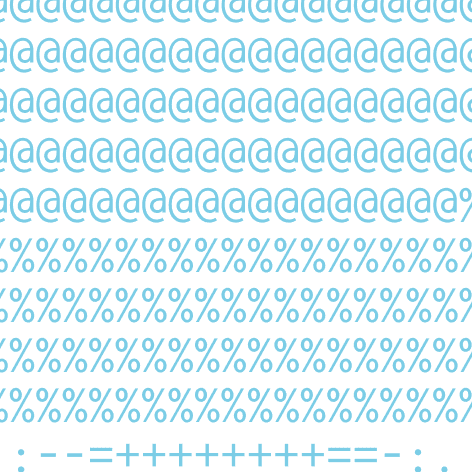

Bit-slicing enables us to use GPUs for the most expensive parts of sumcheck. We will now exhibit how step 3 of the sumcheck protocol benefits from GPU parallelism. In the diagram below, each cell in columns a, b, and c represents a 128-bit element of the Fan-Paar binary field tower, and P is the product of all elements in each row. We compute each consecutive batch of 32 rows to get the 32 values of column P, running identical code in each GPU kernel thread. The P values are then summed to get the final value S (the prover’s message to the verifier), optimized using CUDA’s atomic instructions.

The entire process benefits from the fact that we can sum all values stored across many 128-register blocks without un-bitslicing our data until we get the final accumulated block. Un-bitslicing this single block is only necessary once per round in the interactive process (20-28 times in total), minimizing performance cost. It’s worth noting that the folding process (that occurs between rounds) also benefits from GPU parallelization, as it involves a slew of parallel multiplications between our columns and random challenges selected by the verifier.

Conclusion

At Irreducible, we’re optimizing Binius for both custom FPGA-based servers and client-side proving hardware. Given that sumcheck is the main computational bottleneck for proof generation, our results demonstrate that Binius can run performantly and cost-effectively on consumer-grade GPUs. This lowers the cost of participation for blockchain network provers, reducing centralization risks, and enables client-side-specific ZKP use cases like selective data disclosure for digital identification documents.

We have published our implementation in a new binius-gpu repository for the ZK community to study and build on.

Thanks to Fawad Haider for discussions and inspiration regarding bit-slicing.