Article

Integer Multiplication in Binius

We discuss a GKR-based approach

8/13/24

Irreducible Team

Unsigned integer multiplication is a crucial task in modern zkVM design. While multiplication in the prime-field setting is more or less well-trod, this task's binary-field analog is less explored and has long been thought difficult. Our paper Succinct Arguments over Towers of Binary Fields proposed a solution based on lookups (see Section 5.3 of that work). The elimination of that technique's non-constant commitment cost was left as an open problem.

Prime-field SNARKs implement integer multiplication by combining native multiplication with padding and range-checks. Our paper [DP23] describes a lookup-based approach that decomposes its arguments into fixed-width limbs, and executes the schoolbook algorithm row-by-row. It handles each limb-by-limb product using a lookup (based on our binary-friendly Lasso variant, e.g.). It combines the results of these limb-by-limb products using a further gadget, itself designed to emulate a ripple-carry adder.

In this post, we describe a further approach we've developed internally at Irreducible. The idea is surprising: we work "in the exponent." In other words, to check whether 32-bit integers \(A\) and \(B\) satisfy \(A \cdot B \stackrel{?}= C\), let's say, it's enough to fix a multiplicative generator \(g \in \mathbb{F}_{2^{64}}^*\), and check whether \(\left( g^A \right)^B \stackrel{?}= g^C\) holds in \(\mathbb{F}_{2^{64}}^*\). (The edge case in which \(A = B = 0\) and \(C = 2^{64} - 1\) can be ruled out explicitly, as we explain below.) Of course, exponentiation is not algebraic, and can't be captured using simple constraints. The point of this post is to describe how exponentiation can be captured with the aid of a sequence of polynomial constraints, itself amenable to the GKR protocol. We note that this post's approach actually uses nondeterminism; that is, it requires that the prover commit to \(C\). It thus contrasts with [DP23]'s approach, which "computes" \(C\).

The Prime-Field Case

In large prime fields \(\mathbb{F}_p \cong \{0, \ldots , p - 1\}\), multiplication "looks like" integer multiplication, provided no wraparound occurs. Most SNARKs emulate integer multiplication modulo a power-of-two bitwidth \(2^n\), say, by storing each pair of operands \(x\) and \(y\) in \(\{0, \ldots , 2^n - 1\}\) as \(\mathbb{F}_p\)-elements. If \(n\) is sufficiently small with respect to \(p\), then not only will \(x\) and \(y\) fit without wraparound, but their product also will. Provided that \(x\) and \(y\) can be shown to indeed reside in the range \(\{0, \ldots , 2^n - 1\} \subset \{0, \ldots , p - 1\}\), then, and provided that reduction of \(x \cdot y\) modulo \(2^n\) can be dealt with, we essentially have our solution.

The cost of doing this, of course, is that range-checks require an expensive permutation argument.

Lookup-Based Approach from [DP23]

Binius's original approach breaks its arguments \(x\) and \(y\)—which are natively elements of some tower, say \(\mathcal{T}_5 \cong \mathbb{F}_{2^{32}}\)—into limbs of fixed width, say 8 bits. By performing as many lookups as there are limbs per argument (so 4 in our case), that approach manages to fully "populate" the diagonal array of limb-products called for by the schoolbook algorithm. It finally combines those rows using an addition gadget.

While that approach is asymptotically solid—since it only requires a linear number of lookups and additions in the number of limbs per word—its concrete cost seems to be rather high. It also requires a linear number of auxiliary commitments in the number of limbs per word.

Multiplication in the Exponent via GKR

Our new approach requires just a single auxiliary commitment. It also requires a linear number of sumchecks in the bit-width of the multiplicands. It seems that this will lead to a practically more efficient protocol in a number of situations of practical interest: for example, when sumchecks are relatively cheap compared to commitments.

We describe our new approach now. Let's fix a bit-width \(n\) (e.g. 32 say). Let's assume that we have committed columns \(\left( a_0(v), \ldots , a_{n - 1}(v), b_0(v), \ldots , b_{n - 1}(v) \right)_{v \in \mathcal{B}_\ell}\), and a third, \(2 \cdot n\)-wide chunk of columns \(\left( c_0(v), \ldots , c_{2 \cdot n - 1}(v) \right)_{v \in \mathcal{B}_\ell}\). Each column is defined over \(\mathbb{F}_2\) and is of height \(2^\ell\).

Let's write \(A\), \(B\), and \(C\) for the row-wise integers represented implicitly by these bits; i.e., for each \(v \in \mathcal{B}_\ell\), we have \(A(v) := \sum_{i = 0}^{n - 1} 2^i \cdot a_i(v)\), \(B(v) := \sum_{i = 0}^{n - 1} 2^i \cdot b_i(v)\), and \(C(v) := \sum_{i = 0}^{2 \cdot n - 1} 2^i \cdot c_i(v)\). We're interested in checking whether, for each \(v \in \mathcal{B}_\ell\), \(A(v) \cdot B(v) \stackrel{?}= C(v)\) as integers.

Let's assume that \(2 \cdot n\) is a power of 2, \(2 \cdot n = 2^\iota\), say. We write \(g \in \mathcal{T}_\iota^*\) for a multiplicative generator. We will suggest a way to achieve this which relies on the following subprotcols:

checking that a committed \(\mathcal{T}_\iota\)-column \(V \in \mathcal{T}_\iota^{\mathcal{B}_\ell}\) is given pointwise as \(V(v) = g^{A(v)} = g^{\sum_{i = 0}^{n - 1} 2^i \cdot a_i(v)}\).

checking that given a committed column \(V\), the further virtual column \(W(v) \in \mathcal{T}_\iota^{\mathcal{B}_\ell}\) is given pointwise as \(W(v) = V(v)^{B(v)} = V(v)^{\sum_{i = 0}^{n - 1} b_i(v)}\).

By putting these two ideas together, we will be able to get what we want. The idea is this: the prover will have to commit to the single auxiliary column \(\left( V(v) \right)_{v \in \mathcal{B}_\ell}\), as above. By applying 1, the verifier can check the row-wise validity of \(V = g^A\). By applying 2, the verifier can virtually materialize \(W = V^B\). Finally, by applying 1 again—this time double-width—to \(C(v)\), the verifier can virtually materialize the further column \(X = g^C\). Since \(W\) and \(X\) are multilinear, the verifier can check the identical equality between \(W\) and \(X\) by uniformly sampling a point \(r \gets \mathcal{T}_\tau^\ell\) and requiring \(W(r) \stackrel{?}= X(r)\).

Remark: Why not just use the protocol 2. alone, since 1. is a special case of it? As we will see below, we are able to do 1 more efficiently than we can do 2.

Exponentiation of the generator by a chunk of bit-columns

The first protocol I'll describe is 1. Here is the idea. Let's again fix \(\left( a_0(v), \ldots , a_{n - 1}(v) \right)_{v \in \mathcal{B}_\ell}\) as above. From this, we can describe a sequence of multilinears:

$$ \begin{align*} V_0(X) &= 1 - a_0(X) + a_0(X) \cdot g \\ V_1(X) &= \sum_{v \in \mathcal{B}_\ell} \widetilde{\texttt{eq}}(v, X) \cdot V_0(v) \cdot \left(1 - a_1(v) + a_1(v) \cdot g^2 \right) \\ \vdots \\ V_{n - 1}(X) &= \sum_{v \in \mathcal{B}_\ell} \widetilde{\texttt{eq}}(v, X) \cdot V_{n - 2}(v) \cdot \left( 1 - a_{n - 1}(v) + a_{n - 1}(v) \cdot g^{2^{n - 1}} \right). \end{align*} $$

I claim that, for each \(v \in \mathcal{B}_\ell\), we have the pointwise equality \(V_{n - 1}(v) = g^{A(v)} = g^{\sum_{i = 0}^{n - 1} 2^i \cdot a_i(v)}\). Indeed, inductively, we see that, for each \(i \in \{1, \ldots , n - 1\}\) and each \(v \in \mathcal{B}_\ell\), \(V_i(v) = V_{i - 1}(v) \cdot \left( g^{2^i} \right)^{a_i(v)}\), while \(V_0(v) = g^{a_0(v)}\). Unwinding this, we see that \(V_{n - 1}(v) = g^{2^{n - 1} \cdot a_{n - 1}(v)} \cdot \cdots \cdot g^{a_0(v)} = g^{\sum_{i = 0}^{n - 1} 2^i \cdot a_i(v)}\), which is what we want.

Given an initial claim \(V_{n - 1}(r_{n - 1}) \stackrel{?}= v_{n - 1}\), it is fairly self-evident that we can use GKR on this. Indeed, for each \(i \in \{1, \ldots , n - 1\}\), given a claim of the form \(V_{i}(r_i) \stackrel{?}= v_i\), we can run a sumcheck on the degree-three composition of multilinears \(h_i(X) = \widetilde{\texttt{eq}}(X, r_i) \cdot V_{i - 1}(X) \cdot \left( 1 - a_i(X) + a_i(X) \cdot g^{2^i} \right)\). After an \(\ell\)-round sumcheck, the claim will be reduced to one of the form \(h_i(r'_i) \stackrel{?}= v'_i\). After explicitly querying \(a_i(r'_i)\) and running some local algebraic manipulations, the verifier will be able to reduce this claim in turn to one of the form \(V_{i - 1}(r_{i - 1}) \stackrel{?}= v_{i - 1}\), where in fact here \(r_{i - 1} := r'_i\).

The case of \(V_0(r_0)\) is exceptional; since this thing is just a simple multilinear (as opposed to a sum over the cube of a composition of multilinears), it can just be evaluated directly by the verifier after doing one query to \(a_0\).

So assuming that we have a committed column \(\left( V(v) \right)_{v \in \mathcal{B}_\ell}\), which is purported to equal \(V_{n - 1}\) pointwise, the verifier can first sample \(r_{n - 1} \gets \mathcal{T}_\tau^\ell\), directly query \(v_{n - 1} := V(r_{n - 1})\), and then finally run the GKR-based protocol to check whether \(V_{n - 1}(r_{n - 1}) \stackrel{?}= v_{n - 1}\).

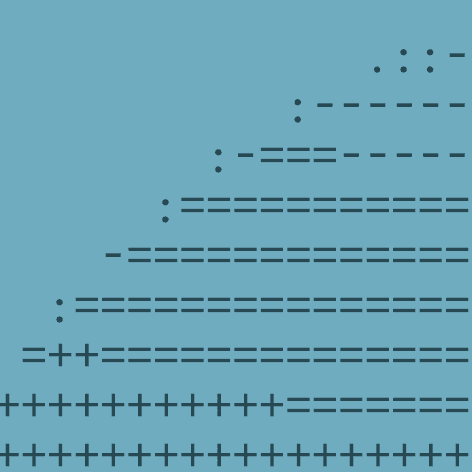

This strategy is depicted graphically in the below circuit.

Note that each "multiplexer" can be handled algebraically, using the expression \(1 - a_i(X) + a_i(X) \cdot g^{2^i}\). The products are just standard products. Importantly, each \(V_i(X)\) is "multilinearized," using a standard sum with an equality indicator.

Exponentiation of one column by a chunk of further bit-columns

The above technique relied on the fact that the base \(g\) was a constant, and that the verifier can locally precompute and store the constants \(g, g^2, \ldots, g^{2^{n - 1}}\). It's a bit tricker when the base is itself a variable column. If we try to reuse the above idea, then the virtual base column will be raised to enormous degrees.

I will describe a way to do this which relies on the reverse, Horner-like method of square-and-multiply. The identity that we care about is that, for some \(\mathcal{T}_\iota\) column \(\left( V(v) \right)_{v \in \mathcal{B}_\ell}\) and further bit-columns \(\left( b_0(v), \ldots , b_{n - 1}(v) \right)_{v \in \mathcal{B}_\ell}\), we should have \(W(v) \stackrel{?}= V(v)^{\sum_{i = 0}^{n - 1} 2^i \cdot b_i(v)}\) for each \(v \in \mathcal{B}_\ell\). We are going to use the fact that, for each \(v \in \mathcal{B}_\ell\), \(V(v)^{\sum_{i = 0}^{n - 1} 2^i \cdot b_i(v)} = \left( \left( \cdots \left( V(v)^{b_{31}(v)} \right)^2 \cdots \right)^2 \cdot V(v)^{b_1(v)} \right)^2 \cdot V(v)^{b_0(v)}\).

So the chain of multilinears is like this:

$$ \begin{align*} W_0(X) &= \sum_{v \in \mathcal{B}_\ell} \widetilde{\texttt{eq}}(v, X) \cdot \left( 1 - b_{n - 1}(v) + b_{n - 1}(v) \cdot V(v) \right) \\ W_1(X) &= \sum_{v \in \mathcal{B}_\ell} \widetilde{\texttt{eq}}(v, X) \cdot \left( W_0(v) \right)^2 \cdot \left(1 - b_{n - 2}(v) + b_{n - 2}(v) \cdot V(v) \right) \\ \vdots \\ W_{n - 1}(X) &= \sum_{v \in \mathcal{B}_\ell} \widetilde{\texttt{eq}}(v, X) \cdot \left( W_{n - 2}(v) \right)^2 \cdot \left( 1 - b_0(v) + b_0(v) \cdot V(v) \right). \end{align*} $$

The correctness again is self-evident from the above discussion. Note that the \(b_i\)s are consumed in the opposite direction as they were before.

What about evaluation? Now, each of these things is a degree-five (!) composition of multilinears (except for \(W_0(X)\), which is of degree three). So as above, for any \(i \in \{1, \ldots , n - 1\}\), given a claim of the form \(W_i(r_i) \stackrel{?}= w_i\), we can run a sumcheck on the degree-five polynomial \(h_i(X) = \widetilde{\texttt{eq}}(X, r_i) \cdot \left( W_{i - 1}(X) \right)^2 \cdot \left( 1 - b_{n - 1 - i}(X) + b_{n - 1 - i}(X) \cdot V(X) \right)\). At the end, we will have a claim of the form \(h_i(r'_i) \stackrel{?}= w'_i\). At the cost of directly querying \(b_{n - 1 - i}(r'_i)\) and \(V(r'_i)\), the verifier can reduce this to a claim of the form \(W_{i - 1}(r'_i)^2 \stackrel{?}= w''_i\). Though the verifier could presumably compute \(w_{i - 1} := \sqrt{w''_i}\) locally, we could alternately ask the prover to nondeterministically supply this value. (In characteristic 2, square-roots always exist and are unique.) In any case, we thus again get a reduction to \(W_{i - 1}(r_{i - 1}) \stackrel{?}= w_{i - 1}\), as required.

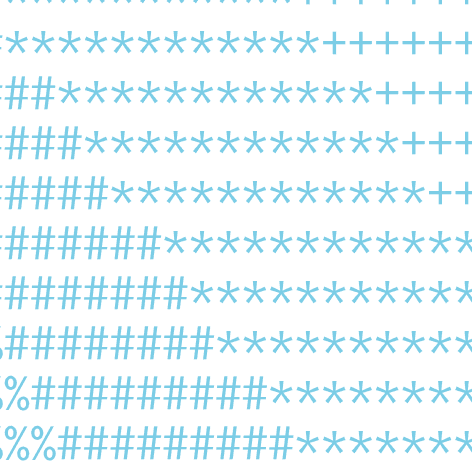

This GKR circuit is depicted again below.

Note that now, the base \(V(X)\) is being fed as an additional input into the circuit, and is wired into each of the multiplexers. Moreover, each successive \(W_i(X)\) needs to go through a squaring gate before it is multiplied into the next multiplexer output.

Remark: Of course, we could have used this idea in the first case—with the constant function \(v \mapsto g\) in place of \(v \mapsto V(v)\). But then our degrees of the \(V_i(X)\)s would have been four, instead of three.

Putting it together

By combining these ideas, we can get a protocol for checking \(A(v) \cdot B(v) \stackrel{?}= C(v)\). The prover has to commit to the auxiliary column \(\left( V(v) \right)_{v \in \mathcal{B}_\ell} := \left( g^{\sum_{i = 0}^{n - 1} 2^i \cdot a_i(v)} \right)_{v \in \mathcal{B}_\ell}\). Let's write also \(X_0(X), \ldots , X_{2 \cdot n - 1}(X)\) for the result of exponentiating the generator by the chunk \(\left( c_0(v), \ldots , c_{2 \cdot n - 1}(v) \right)_{v \in \mathcal{B}_\ell}\), as in the protocol for 1. Then, the verifier may sample a random element \(r_{2 \cdot n - 1} \gets \mathcal{T}_\tau^\ell\); the verifier's goal is to establish that \(X_{2 \cdot n - 1}(r_{2 \cdot n - 1}) \stackrel{?}= W_{n - 1}(r_{2 \cdot n - 1})\). The prover can nondeterministically send the verifier a claimed value \(x_{2 \cdot n - 1}\) for these respective two sides; at this point, the verifier can independently kick off the two above GKR protocols "in parallel." At the end of the \(n - 1^{\text{th}}\) round of the protocol for the \(W\)s, the verifier will need to check whether \(w'_0 \stackrel{?}= h_0(r'_0) = \widetilde{\texttt{eq}}(r'_0, r_0) \cdot (1 - b_{n - 1}(r'_0) + b_{n - 1}(r'_0) \cdot V(r'_0))\). After evaluating \(b_{n - 1}(r'_0)\) and \(V(r'_0)\) in the usual way, the verifier can check this claim.

We can then reuse the random evaluation point \(r'_0\) as \(r_{n - 1}\) for the next GKR chain; i.e., to kick off the chain using the claim \(V_{n - 1}(r_{n - 1}) \stackrel{?}= V(r_{n - 1})\). The value of the right-hand side, which the verifier just learned by direct query, thus gives the initial evaluation claim for \(V_{n - 1}(r_{n - 1})\), which gives us what we need to kick off the second half of the chain. (This trick saves the verifier a single query; i.e., that of \(V(r_{n - 1})\) for a freshly and independently sampled \(r_{n - 1}\).)

Handling wraparound modulo \(2^{2 \cdot n} - 1\)

The above protocol only shows that \(A(v) \cdot B(v) \stackrel{?}\equiv C(v) \mod{2^{2 \cdot n} - 1}\), since \(|\mathcal{T}_\iota^*| = 2^{2 \cdot n} - 1\). Since \(A(v) \cdot B(v) \leq 2^{2 \cdot n} - 2^{n + 1} + 1 < 2^{2 \cdot n} - 1\), the only way for this equality to hold modulo \(2^{2 \cdot n} - 1\) but not over the integers is if we have the combination of values \(A(v) = 0\), \(B(v) = 0\), and \(C(v) = 2^{2 \cdot n} - 1\).

To exclude this combination of values, I will use a trick suggested to us by Angus Gruen from Polygon Zero. His observation is that, to rule the above case out, it's a fortiori enough to show that \(A(v) \cdot B(v) \stackrel{?}\equiv C(v) \mod{2}\) holds. This latter requirement, in turn, is checkable using a single \(\mathbb{F}_2\)-constraint, namely \(a_0(v) \cdot b_0(v) - c_0(v) = 0\) (i.e., we can pay attention only to the three numbers' respective least-significant bits). This thing will be extremely easy to zerocheck.

There is another way of expressing Angus' idea. If we check that the modular equalities \(A(v) \cdot B(v) \stackrel{?}\equiv C(v) \mod{2^{2 \cdot n} - 1}\) and \(A(v) \cdot B(v) \stackrel{?}\equiv C(v) \mod{2}\) both hold, we're effectively checking whether \(A(v) \cdot B(v) \stackrel{?}\equiv C(v) \mod{2 \cdot (2^{2 \cdot n} - 1)}\), by Chinese remainder. Since both sides \(A(v) \cdot B(v)\) and \(C(v)\) are at most \(2^{2 \cdot n} - 1 < 2 \cdot (2^{2 \cdot n} - 1)\), we know that the modular equality must lift to an integer equality.