
The Need for High-Performance Zero Knowledge Proving

ZKP requires high-performance compute to bring cost-effective, real-time transaction validation to web3.

6/5/24

Irreducible Team

TL;DR

Improvements in performance and cost-efficiency of information technology systems have historically driven widespread adoption and market growth of IT services and products.

Zero-knowledge proving is key to scaling Ethereum in the short term and realizing future applications of verifiable computation for a broad and robust range of verticals, including the Internet of Things, artificial intelligence, and the verifiable internet.

To match (and surpass) the high user experience and performance benchmarks set by the “web2” Internet, ZKP must provide blockchain protocols with cost-effective, real-time transaction validation.

This demands high-performance compute leveraging massively parallel processing, acceleration systems with direct memory access, and application-specific hardware.

The value of information technology has always hinged on performance and cost. How much use we could derive from IT services and products initially depended on limiting factors such as how quickly data could be moved or how cheaply it could be stored. As hardware and software evolved over the past few decades, increasing performance and decreasing resource cost, the usefulness and utilization of IT as a whole rose accordingly. Consider that today at least a billion people globally stream high-quality Netflix movies on their mobile phones within seconds. In 1989, by contrast, there were barely a million internet users, none of whom could download a single song in under ten minutes.

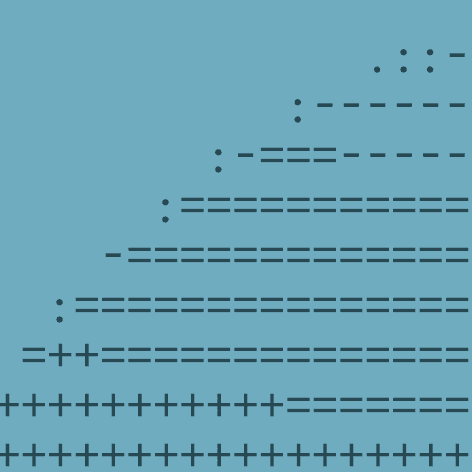

The point is well illustrated by the popularity of TCP/IP-based internet services. Over the past decade, total internet traffic volume has increased ~40% annually, while bandwidth, the rate at which a network can transmit data, has increased almost 900% (YoY). This market growth outstrips the corresponding decrease in broadband cost: internet traffic increased 125% from 2016 to 2021, while broadband costs decreased on average only 31%.

*Nielsen’s law of internet bandwidth and IBISWorld

Similarly, while the cost of data storage has decreased 69% since 2010, the annual storage capacity shipped has increased 483% to meet rising demand for all the digital content created, captured, or replicated. In other words, as cost improved by a factor of about 3, demand for storage grew by a factor of about 6. This trend is all the more impressive given that storage devices can easily last several years, so shipments are not driven by frequent replacement alone. In fact, despite decreasing per-unit costs, the global data storage market reached $212B in 2022 and is projected to grow at 17.9% annually through 2030.

*IDC and Our World in Data
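The "factor of 3" and "factor of 6" figures follow directly from the percentages above. A minimal Python sketch of that conversion, using only the numbers quoted in the paragraph (the function name is hypothetical):

```python
def improvement_factors(cost_decrease: float, capacity_increase: float) -> tuple[float, float]:
    """Convert percentage changes into multiplicative factors.

    cost_decrease: fractional drop in cost (0.69 means "decreased 69%")
    capacity_increase: fractional rise in capacity shipped (4.83 means "increased 483%")
    """
    cost_factor = 1 / (1 - cost_decrease)   # how many times cheaper storage became
    demand_factor = 1 + capacity_increase   # how many times more capacity was shipped
    return cost_factor, demand_factor

cost_factor, demand_factor = improvement_factors(0.69, 4.83)
print(f"cost improved ~{cost_factor:.1f}x, demand grew ~{demand_factor:.1f}x")
# prints: cost improved ~3.2x, demand grew ~5.8x
```

Rounded, these are the roughly 3x and 6x factors cited: demand grew almost twice as fast as storage got cheaper.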

Unsurprisingly, the same theme holds true for data processing, or compute power. With Moore’s law having held generally true for the past ~60 years, both enterprise-grade and retail devices are now exponentially more powerful, smaller, and cheaper. Today’s mobile phones are about 5,000 times faster than a 1980s CRAY supercomputer, yet affordable for the average consumer. The result is democratized access to performant compute for a broad range of uses, which created soaring mobile demand and market growth. Mobile phones account for 60% of the world’s web traffic, and the market as a whole has grown 300% annually since 2009.¹

In short, as the capacity and performance of demand-driven IT services have risen, so has their utilization. Search wouldn’t be nearly as useful at dial-up speeds. Social media wouldn’t have proliferated so extensively without cheap storage for zettabytes of content. Internet usage would be substantially lower if we couldn’t carry it in our pockets. And because demand for these services outpaced the corresponding decrease in resource cost, their markets grew.

We expect a similar dynamic to play out in the zero-knowledge proving market, with utilization driven by strong demand for verifiable or private computation. In the short term, the primary use case is enabling layer 1 blockchains (mainly Ethereum) to outsource transaction processing to layer 2s, thereby scaling L1s without sacrificing consensus-based security. Unfortunately, proof generation is still too slow for L2s to validate transactions in real time, forcing protocols like zkSync to rely on distributed validator sets for instant confirmation. Minimizing this performance limitation via high-performance computing (HPC) is key to meeting (and surpassing) the Internet’s benchmark for high-quality UX, scalability, and interoperability. High-throughput, easy-to-use platforms for digital payments, exchange-traded tokens, and real-world assets are the primary drivers of short-term web3 adoption. We expect growth in blockchain transaction throughput to outpace the decline in proof generation cost, so that the overall market grows substantially.

For use cases like ZKP with extraordinarily high performance demands, general-purpose compute limited to sequential processing on relatively few CPU cores isn’t sufficient. HPC instead pushes performance limits via massively parallel processing, low-latency components, and networked clusters of thousands of CPU cores. Accelerating ZKP to the point that layer 2 protocols can generate validity proofs for transactions in real time requires parallel processing, high-performance acceleration systems with direct memory access (DMA), and application-specific hardware.

Massively parallel processing reached the mainstream with the advent of graphics processing units, or GPUs (see the Acquired podcast’s Nvidia episodes). As the name implies, GPUs were originally designed for 3D graphics rendering, and they drove the explosion of PC gaming in the 1990s and early 2000s. Graphics processing essentially consists of computing the color of each pixel on a display to create an image or video. Because each pixel can be computed largely independently of the others, these program instructions can be executed simultaneously instead of sequentially.

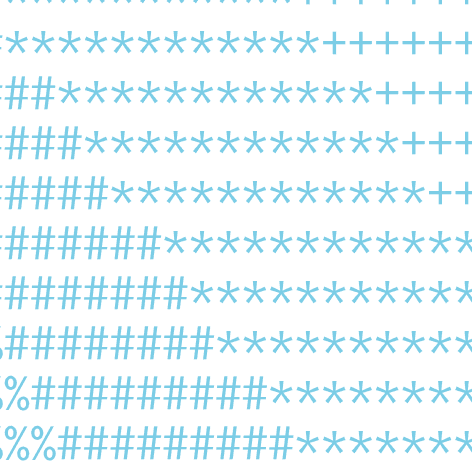

GPU-accelerated computing was quickly extended to other inherently parallel applications, like artificial intelligence. It sped up deep neural network training 10-20x, reducing each of the many training iterations from weeks to days, and was a major catalyst for AI services capable of real-time decision-making (like ChatGPT and self-driving vehicles). As shown below, the Caffe deep learning framework gained a 50x boost over only three years once Nvidia replaced CPUs with its K40 and M40 GPUs, significantly outpacing Moore’s law.
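To put the 50x figure in perspective, one can compare it with the gain Moore’s law alone would predict over the same three years. The sketch below assumes the commonly cited doubling period of about two years (an assumption; 18 months is also sometimes quoted), and the function names are illustrative only:

```python
def moores_law_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Expected performance multiple if capability doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)

def annualized(factor: float, years: float) -> float:
    """Constant per-year multiple that compounds to `factor` over `years`."""
    return factor ** (1 / years)

years = 3
print(f"Moore's law over {years} years: ~{moores_law_factor(years):.1f}x")        # ~2.8x
print(f"Moore's law per year: ~{annualized(moores_law_factor(years), years):.2f}x")  # ~1.41x
print(f"Caffe 50x over {years} years: ~{annualized(50, years):.1f}x per year")    # ~3.7x
```

Under this assumption, Moore’s law predicts under 3x over three years, while 50x corresponds to roughly a 3.7x improvement every year.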

Zero-knowledge proving is still early in development and has yet to standardize on proof systems or system parameters. Yet the approaches share a key property: the most computationally intensive phases of proof generation are highly parallelizable. For example, there are embarrassingly parallel algorithms for the multi-scalar multiplication (MSM) operation, which accounts for over 80% of the computational cost of elliptic curve SNARK proving, as well as for the sumcheck algorithm, which dominates the computational cost of Irreducible’s Binius system. Both benefit significantly from parallel, multi-threaded processing.
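A short sketch of why MSM parallelizes so well: the sum of s_i * P_i can be split into independent chunks whose partial sums are combined at the end. To stay self-contained, it uses integers modulo a prime under addition as a toy stand-in for an elliptic-curve group (a real prover would use curve points, and production GPU implementations typically use more elaborate bucket-based schemes such as Pippenger’s algorithm). Python threads also do not give true CPU parallelism for pure-Python code because of the global interpreter lock, so the point here is the decomposition rather than the speedup. All names and the modulus `Q` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

# Toy stand-in for an elliptic-curve group: integers modulo a prime under addition.
# Not cryptographically meaningful; a real prover would add curve points instead.
Q = 2**61 - 1  # hypothetical group order (a Mersenne prime), for illustration only

def group_add(p: int, q: int) -> int:
    """Group operation (point addition in the real setting)."""
    return (p + q) % Q

def scalar_mul(s: int, p: int) -> int:
    """Double-and-add scalar multiplication, the same loop used for curve points."""
    acc, base = 0, p  # 0 is the group identity
    while s:
        if s & 1:
            acc = group_add(acc, base)
        base = group_add(base, base)  # doubling
        s >>= 1
    return acc

def msm_chunk(scalars: list[int], points: list[int]) -> int:
    """Partial sum of s_i * P_i over one independent slice of the input."""
    acc = 0
    for s, p in zip(scalars, points):
        acc = group_add(acc, scalar_mul(s, p))
    return acc

def parallel_msm(scalars: list[int], points: list[int], workers: int = 4) -> int:
    """Split the MSM into independent chunks, evaluate them concurrently,
    then combine the partial sums with a cheap final reduction."""
    n = len(scalars)
    step = max(1, -(-n // workers))  # ceiling division: chunk size per worker
    chunks = [(scalars[i:i + step], points[i:i + step]) for i in range(0, n, step)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(lambda chunk: msm_chunk(*chunk), chunks))
    result = 0
    for part in partials:
        result = group_add(result, part)
    return result

print(parallel_msm([3, 5, 7, 11], [10, 20, 30, 40], workers=2))  # 780 = 3*10 + 5*20 + 7*30 + 11*40
```

Because the group operation is associative and commutative, the chunking can be arbitrary; on a GPU, each chunk (or each bucket in Pippenger-style methods) maps naturally onto its own block of threads.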

*Nvidia

High-performance hardware accelerators with DMA enable high-throughput, low-latency data transfer between the CPU and the tailor-made accelerator. Today’s ZK protocols are mostly data-bound (except for MSM-based SNARKs, which are still compute-bound) and require acceleration systems that exploit the full PCIe throughput to minimize data transfer times.

This is largely achieved by using sophisticated drivers and DMA controllers that adapt to how ZK provers manage data, both in system memory and in the CPU cache. Accelerators should be able to execute native computations faster than the CPU. While they are designed for parallel execution, they must also support pipelined processing so they can sustain the data rate of the PCIe bus they interface with. Additionally, the server should use multiple accelerators so the host CPU can dispatch computations to them in a time-multiplexed fashion. We recently described the significant performance benefits of using such a system to accelerate Polygon’s zkEVM layer 2. Ultimately, optimized DMA-based hardware accelerators aim for maximum efficiency by matching the total PCIe throughput to the total DDR memory throughput.
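The interplay between a shared PCIe link, pipelining, and multiple accelerators can be illustrated with a toy schedule. This is a minimal model under stated assumptions, not a description of any real driver: transfers are serialized on one shared link, each card has enough buffering to accept the next job’s data while still computing (pipelining), every job has fixed transfer and compute times, and the host dispatches jobs round-robin, one simple form of time-multiplexed dispatch. `Job` and `makespan` are hypothetical names.

```python
from dataclasses import dataclass

@dataclass
class Job:
    transfer_s: float  # time to DMA the job's input over the shared PCIe link
    compute_s: float   # accelerator compute time once the data has arrived

def makespan(jobs: list[Job], num_accelerators: int) -> float:
    """Total time to finish all jobs when the host dispatches them round-robin.

    Assumes one shared link (transfers back to back) and on-card buffering,
    so a transfer can overlap with compute of an earlier job (pipelining)."""
    link_free = 0.0                        # time when the shared link is next idle
    accel_free = [0.0] * num_accelerators  # time when each accelerator is next idle
    for i, job in enumerate(jobs):
        a = i % num_accelerators           # round-robin dispatch
        link_free += job.transfer_s        # this job's data finishes arriving
        start = max(link_free, accel_free[a])  # need the data and a free accelerator
        accel_free[a] = start + job.compute_s
    return max(accel_free)

jobs = [Job(transfer_s=1.0, compute_s=4.0) for _ in range(8)]
for k in (1, 2, 4, 8):
    print(k, makespan(jobs, k))
# 1 33.0
# 2 18.0
# 4 12.0
# 8 12.0
```

With a 4:1 compute-to-transfer ratio, adding accelerators helps until there are four; beyond that the shared link is the bottleneck and extra cards sit idle. This is the same balancing idea the paragraph describes: system throughput is capped by whichever side, data movement or processing, saturates first.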

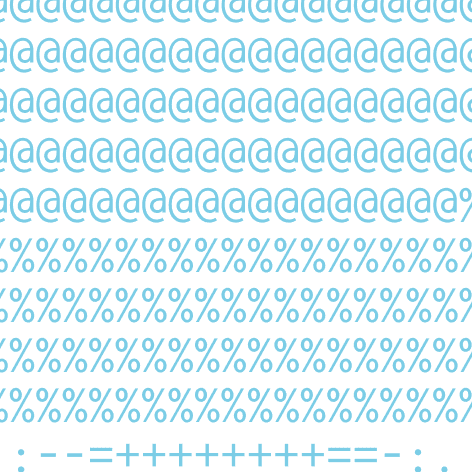

Application-specific systems-on-chip (SoCs) have dominated the high-performance computing space in recent years. Apple introduced its M-series SoCs in late 2020 to efficiently handle the wide range of MacBook workloads. These SoCs integrate 10 CPU, 16 GPU, and 16 NPU (AI) cores, combining compute resources previously provided by several discrete chips. Unlike the hardware acceleration systems described above, highly integrated SoCs are not bound by PCIe throughput. They are compute-bound, so new product generations primarily scale the computing resources. For example, the M2’s CPU is 18% faster than the M1’s, its GPU 35% faster, and its neural engine 40% faster.

Unlike ASICs, these SoCs are highly programmable and therefore resilient to protocol changes. ZKP needs application-specific SoCs to deliver the 100x speed improvements required for real-time, client-side proving in verifiable compute applications across verticals, including the Internet of Things, artificial intelligence, and the verifiable internet. We expect the embedded CPU cores in such SoCs to feature ZK-friendly instructions, unlike commonly used x86 and ARM processors, which lack sufficient custom instructions for prime-field and binary-field arithmetic.
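To make the prime/binary distinction concrete, the sketch below multiplies elements in a 64-bit prime field and in a small binary field. The prime is the "Goldilocks" prime 2^64 - 2^32 + 1, used by some ZK systems such as Plonky2; the binary field is GF(2^8) with the AES reduction polynomial, chosen only because it is small and well documented (Binius itself works over binary tower fields, which are constructed differently). In a binary field, addition is XOR and multiplication is a carry-less multiply followed by reduction, which on a general-purpose core becomes the shift-and-XOR loop below; that loop is the kind of work a dedicated ZK-friendly instruction could collapse. Function names are illustrative.

```python
GOLDILOCKS_P = 2**64 - 2**32 + 1  # 64-bit prime used by some ZK proof systems

def prime_field_mul(a: int, b: int) -> int:
    """Multiply in GF(p). Python's big integers hide it, but on a 64-bit CPU this is
    a 64x64 -> 128-bit multiply followed by a multi-step modular reduction."""
    return (a * b) % GOLDILOCKS_P

AES_POLY = 0x11B  # x^8 + x^4 + x^3 + x + 1, the AES field polynomial

def binary_field_add(a: int, b: int) -> int:
    """Addition in any binary field GF(2^k) is bitwise XOR (no carries)."""
    return a ^ b

def binary_field_mul(a: int, b: int) -> int:
    """Multiply in GF(2^8): carry-less shift-and-XOR multiplication, reducing by
    AES_POLY whenever the running multiple of `a` reaches degree 8."""
    result = 0
    while b:
        if b & 1:
            result ^= a   # "add" the current multiple of a (XOR, no carry)
        b >>= 1
        a <<= 1           # multiply a by x
        if a & 0x100:     # degree 8 reached: reduce modulo the field polynomial
            a ^= AES_POLY
    return result

print(hex(binary_field_mul(0x57, 0x83)))  # 0xc1, the worked example in FIPS-197
print(prime_field_mul(GOLDILOCKS_P - 1, GOLDILOCKS_P - 1))  # 1, since (-1) * (-1) = 1
```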

Ultimately, zero-knowledge proving is key to the real-time transaction validation that blockchain-based protocols need to rival and surpass the performance and usability benchmarks set by the “web2” Internet. Enabling high-throughput, low-latency, low-cost transactions requires ZKP-specific hardware and acceleration systems. Even as this hardware drives down the cost of generating proofs, demand and market size for ZKP, and for web3 as a whole, will grow as the utility of decentralized networks increases.